So, are AI detectors accurate? The honest answer is... it's complicated.

Under perfect lab conditions, analyzing raw, unedited text straight from a model like ChatGPT, they can do a decent job. But their reliability takes a nosedive in the real world. As soon as a human touches that text—editing, paraphrasing, or mixing it with their own writing—accuracy becomes a huge question mark.

A Realistic Look at AI Detection

To get a real sense of AI detector accuracy, we have to look past a simple "yes" or "no." The performance of these tools isn't a fixed number; it's a moving target that shifts depending on what you feed them.

Think of an AI detector as a rookie detective trained to spot counterfeit cash. It knows the classic signs of a fake: the wrong texture, predictable serial numbers, a certain lack of "feel." When analyzing a fresh, untouched piece of AI text, the detector often spots these tells easily. The machine's fingerprints—like overly predictable sentence structures and a certain rhythmic uniformity—are all over it.

But the moment a human writer steps in to edit that text, things get messy. Once they start rewriting sentences, adding personal stories, or tweaking the flow, those obvious clues vanish. The text starts to feel less like a machine-made copy and much more like the real thing.

The Problem with False Flags

This brings us to the two most critical (and problematic) outcomes in AI detection:

- False Positives: This is when a detector wrongly flags human-written content as being AI-generated. This is by far the most dangerous error, putting students, writers, and professionals at risk of false accusations.

- False Negatives: This happens when a detector fails to spot AI-generated text, letting it pass as human work. While less harmful to an individual's reputation, this undermines the tool's entire purpose.

The real challenge here is that even a tiny error rate can cause massive problems. A detector with a 1-2% false positive rate might sound great on paper. But for a university with 10,000 students, each submitting one paper they wrote themselves, that could still mean 100 to 200 false accusations every time a major paper is due.
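The arithmetic behind that 100-to-200 estimate is easy to check. The minimal Python sketch below assumes every paper is human-written (false positives can only happen on human work) and that each student submits exactly one; the function name `expected_false_flags` is illustrative, not taken from any detector.

```python
def expected_false_flags(num_human_papers: int, false_positive_rate: float) -> float:
    """Expected number of human-written papers wrongly flagged as AI."""
    if not 0.0 <= false_positive_rate <= 1.0:
        raise ValueError("false_positive_rate must be between 0 and 1")
    return num_human_papers * false_positive_rate


# 10,000 students, each submitting one human-written paper (an assumption).
for fpr in (0.01, 0.02):
    print(f"FPR {fpr:.0%}: ~{expected_false_flags(10_000, fpr):.0f} false flags")
```

Keep in mind this is an expected value: the real number of wrongly flagged students in a given term would fluctuate around it.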

What Independent Studies Reveal

Don't just take our word for it. Independent research consistently highlights these limitations.

A study from the University of Maryland found that when text from non-native English speakers was analyzed, detectors frequently misclassified it as AI-generated. Their unique sentence structures and grammatical patterns were enough to trigger the alarms.

Another study, published in the journal Patterns, tested seven popular AI detectors and concluded that "none of the detectors… performed with high accuracy and reliability." They found that simple paraphrasing was often enough to trick the tools.

This table gives a clearer picture of how accuracy can vary wildly depending on the type of text being scanned.

AI Detector Performance at a Glance

| Content Type | Typical Accuracy | Risk of Being Wrong |
|---|---|---|
| Raw, Unedited AI Text | High (85-95%+) | Low risk of false negatives. |
| Human-Edited AI Text | Moderate to Low | High risk of false negatives. Edits mask AI patterns. |
| Paraphrased AI Text | Very Low | Highest risk of false negatives. Tools often fail completely. |
| Non-Native English Writing | Highly Unreliable | Very high risk of false positives. Can penalize writers. |
| Creative or Complex Topics | Unpredictable | Mixed risk. AI struggles with nuance, but so do detectors. |

As you can see, the moment the text strays from the simple, unedited AI output these tools were trained on, their performance starts to crumble.

Setting Realistic Expectations

Because of all this, relying on a single AI detector score is a deeply flawed approach. Big names like Turnitin and Copyleaks are pretty good at spotting pure AI text, but they often struggle as soon as someone tries to evade detection. The data shows that even minor manual edits can make detection scores plummet.

This context is everything. The question isn't just, "Are AI detectors accurate?" The better question is, "How accurate are they for this specific piece of text under these specific conditions?" The answer depends on the AI model used, the topic's complexity, the text's length, and most importantly, how much a human was involved. This reality has to shape how we interpret their results—not as a final verdict, but as just one clue in a much bigger investigation.

How AI Content Detectors Actually Work

To get a real sense of whether AI detectors are reliable, we need to peek under the hood and see what they're actually looking for. Think of an AI detector less like a reader and more like a "linguistic detective." It's not trying to understand the meaning of the words; it's hunting for the subtle statistical fingerprints that machines tend to leave behind.

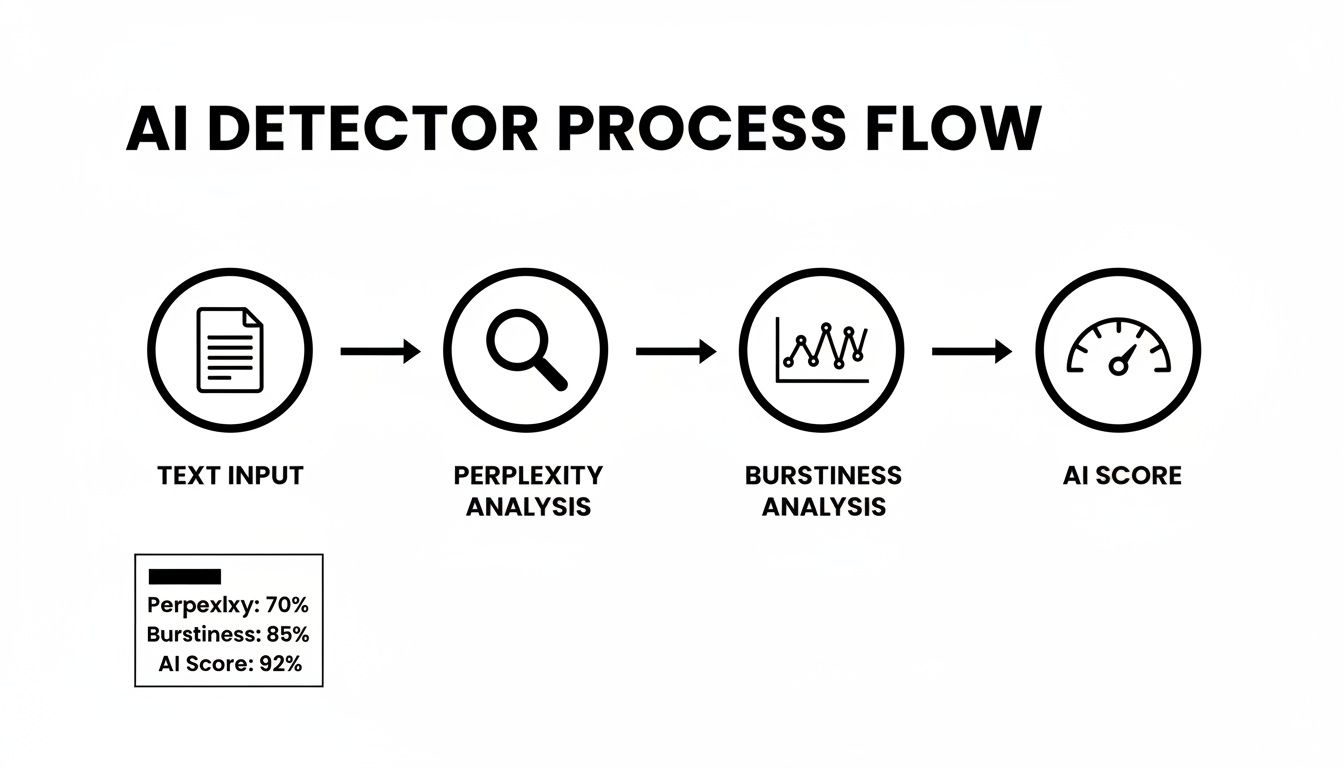

This detective work boils down to two key concepts: perplexity and burstiness. Once you get your head around these two ideas, you'll see exactly why these tools can be both surprisingly clever and easily fooled.

The Predictability Test: Perplexity

At its core, perplexity is just a fancy term for how predictable a text is. Imagine you see the sentence, "The dog wagged its ___." Your brain instantly fills in "tail." The next word is obvious, expected. That’s a sign of low perplexity.

AI models, especially the earlier versions, are trained to be professional word-guessers. They tend to favor the most statistically probable next word, which results in writing that's smooth and logical but often incredibly predictable. Human writing, on the other hand, is messier and more creative. We throw in odd metaphors, choose less common words, and structure our thoughts in ways an algorithm wouldn't expect.

A low perplexity score is a huge red flag for an AI detector. It's a strong hint that the text is playing it safe, following a predictable path just like a machine would. Our human creativity naturally leads to higher perplexity—we're simply less predictable.

So, when a detector scans a document, it’s constantly asking, "How surprised am I by this next word?" If the answer is consistently "not very," its suspicion of AI involvement starts to climb.
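Technically, perplexity is the exponential of the average negative log-probability a language model assigns to each token in a text. The sketch below computes it from a list of per-token probabilities. The probabilities are invented for illustration; a real detector would get them from its own language model, and its exact scoring details may differ.

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-probability of each observed token)."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("probabilities must be in (0, 1]")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)


# Hypothetical per-token probabilities, not real model output.
predictable = [0.9, 0.8, 0.95, 0.85]  # the model saw each word coming
surprising = [0.2, 0.05, 0.1, 0.3]    # unusual, creative word choices

print(f"predictable: {perplexity(predictable):.2f}")
print(f"surprising:  {perplexity(surprising):.2f}")
```

The predictable sequence scores close to 1 (the lowest possible perplexity), while the surprising one scores several times higher.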

The Rhythm of Human Writing: Burstiness

The second major clue is burstiness, which is all about the rhythm and flow of the sentences. When you talk, you naturally vary your pacing. You might use a short, sharp sentence for emphasis, followed by a longer one that meanders and adds detail. That natural variation is burstiness.

AI-generated text often struggles with this. It tends to fall into a monotonous rhythm, with sentences that are all roughly the same length and structure. The writing feels flat because it lacks the energetic "bursts" of complexity followed by moments of simplicity. It's just too consistent, too perfect.

A detector looks at this flow and checks for:

- A healthy mix of short, medium, and long sentences.

- Different grammatical structures from one sentence to the next.

- The absence of a repetitive, almost robotic cadence.

Tools like the one from Copyleaks can even visualize this process, often highlighting the specific sentences that fit a machine-like pattern.

The parts of the text that get flagged usually have both low perplexity and low burstiness, signaling a potential AI origin.
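There is no single published formula for burstiness, so the sketch below uses one simple proxy as an assumption: the coefficient of variation (standard deviation divided by mean) of sentence lengths in words. The regex sentence splitter is deliberately naive and will mis-split abbreviations like "e.g.".

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? followed by whitespace and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length; higher = more varied rhythm."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)


uniform = ("The tool scans the text. The tool checks each word. "
           "The tool reports a score. The tool flags the file.")
varied = ("Stop. Before you trust that score, think about how the detector "
          "actually reached it and what it never saw. Then decide.")

print(f"uniform: {burstiness(uniform):.2f}")
print(f"varied:  {burstiness(varied):.2f}")
```

The four identical-length sentences score 0.0, while the mix of one-, eighteen-, and two-word sentences scores above 1.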

Putting the Clues Together

An AI content detector isn’t just looking for one of these signals; it combines them to make a judgment. It's searching for a pattern. When a text has both highly predictable word choices (low perplexity) and a monotonous, even rhythm (low burstiness), the detector's confidence that it’s looking at AI-generated content skyrockets.

This is also why these tools aren't foolproof. When a human edits AI-generated text, they instinctively start breaking these patterns. They swap out a boring, predictable word for a more creative one (increasing perplexity) and chop up or combine sentences to make the flow more natural (increasing burstiness). That human touch effectively smudges the very fingerprints the linguistic detective was trained to find, and it's why a simple score can never tell you the full story.
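A toy version of that combined judgment might look like this. The cutoffs are arbitrary placeholders rather than values any real detector publishes, and real tools are likely to use more sophisticated models than two hand-set thresholds.

```python
from dataclasses import dataclass


@dataclass
class TextSignals:
    perplexity: float  # lower = more predictable word choices
    burstiness: float  # lower = more uniform sentence rhythm


# Hypothetical cutoffs chosen only for illustration.
PERPLEXITY_CUTOFF = 20.0
BURSTINESS_CUTOFF = 0.4


def verdict(signals: TextSignals) -> str:
    """Flag text only when both AI fingerprints are present."""
    low_perplexity = signals.perplexity < PERPLEXITY_CUTOFF
    low_burstiness = signals.burstiness < BURSTINESS_CUTOFF
    if low_perplexity and low_burstiness:
        return "likely AI"      # both signals fire
    if low_perplexity or low_burstiness:
        return "uncertain"      # only one signal fires
    return "likely human"


print(verdict(TextSignals(perplexity=8.0, burstiness=0.15)))  # raw AI-like text
print(verdict(TextSignals(perplexity=8.0, burstiness=0.9)))   # rhythm edited
print(verdict(TextSignals(perplexity=45.0, burstiness=0.9)))  # rhythm and wording edited
```

The second case mirrors what human editing does: varying the sentence rhythm alone moves the toy detector from "likely AI" to "uncertain", and changing the word choices as well moves it to "likely human".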

Decoding the Real Data Behind Accuracy Claims

AI detection companies love to advertise near-perfect accuracy, but when you look past the bold marketing claims, independent research tells a much messier story. Let's move from theory to hard evidence and see how these tools actually perform in the real world.

A detector can easily boast 99% accuracy when analyzing a clean, specific dataset where the lines between human and AI text are perfectly clear. But those lab-like conditions almost never happen in practice. The moment that text gets paraphrased, edited, or mixed with a bit of human writing, those impressive accuracy scores start to crumble.

Marketing Hype Versus Independent Studies

The gap between what's advertised and what actually happens is huge. While a tool's homepage might promise you reliable detection, academic studies and independent tests consistently find major holes in their capabilities. This isn't just a tiny difference—it’s a fundamental problem with how they work.

What’s worse, many of the free or lesser-known tools you’d find with a quick search perform terribly even under ideal conditions. Some tests show they barely function at all, which really drives home the risk of trusting the first tool you find without checking its credibility.

A critical takeaway from multiple studies is that a high detection rate for pure AI text means very little on its own. A tool that flags everything as AI would technically have a 100% detection rate for AI content, but it would be completely useless due to its astronomical number of false positives.
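A quick calculation makes this concrete. The sketch scores a hypothetical detector that labels every document as AI, on a made-up test set of 100 AI texts and 100 human texts; the counts are illustrative, not from any study.

```python
def detector_metrics(tp: int, fn: int, fp: int, tn: int) -> dict[str, float]:
    """Standard confusion-matrix rates for an AI detector.

    tp: AI texts flagged as AI      fn: AI texts passed as human
    fp: human texts flagged as AI   tn: human texts passed as human
    """
    return {
        "detection_rate": tp / (tp + fn),       # share of AI text caught
        "false_positive_rate": fp / (fp + tn),  # share of human text wrongly flagged
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
    }


# A detector that labels every document "AI" on 100 AI + 100 human texts.
flag_everything = detector_metrics(tp=100, fn=0, fp=100, tn=0)
print(flag_everything)
```

It catches every AI text (a detection rate of 1.0), but it also flags every human text (a false positive rate of 1.0), so its overall accuracy is 0.5, no better than a coin flip on this balanced set.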

The Achilles' Heel: Simple Paraphrasing

The biggest weakness for most detectors? Simple editing. A recent academic study that pitted 250 human articles against 750 texts from ChatGPT exposed just how flawed these tools are.

Sure, a tool like ZeroGPT could tell the difference between raw human writing (averaging a 27.55% AI likelihood) and raw GPT-4o output (94.35% likelihood). But the second a paraphrasing tool touched the text, the scores collapsed. In one trial, a simple rephrasing tool slashed a document's AI score from 99.52% down to a shocking 0.02%. You can dig into the full results in this in-depth AI detector accuracy research.

This is exactly why they struggle. Detectors are trained to spot predictable patterns, and human editing or a good paraphrasing tool effectively scrambles those signals, making an accurate judgment nearly impossible.

The table below gives you a quick snapshot of how the big names in AI detection stack up when their marketing claims are put to the test.

Advertised vs. Actual Accuracy of Leading AI Detectors

| AI Detector | Advertised Accuracy (Ideal Scenarios) | Observed Accuracy (Real-World Use) | Key Weakness |
|---|---|---|---|
| Turnitin | Focuses on low false positives | 1-20% false positive rate; struggles with edited text | Optimized for academia, but easily confused by paraphrasing. |
| ZeroGPT | Up to 99% | Accuracy drops over 50% with paraphrasing | Very sensitive to basic editing and "humanizing" tools. |
| OpenAI's Detector | N/A (Discontinued) | Correctly identified only 26% of AI text | Highlighted the core difficulty of detection, even for AI creators. |
| Various Tools | 95-99% common claims | Often fail to detect paraphrased content at all (0-10% AI score) | Inconsistent results and high susceptibility to simple evasion tactics. |

As the numbers show, real-world performance is a far cry from the polished figures you see on their websites. Once human intervention comes into play, reliability plummets.

Inconsistencies From Tool to Tool

Another massive headache is the lack of consistency. If you run the exact same text through three different detectors, you’ll likely get three wildly different results. One might flag it as 100% human, another might say it's 80% AI, and a third could land somewhere in the middle.

This puts you in a tough spot, essentially forcing you to guess which one is right. It’s why relying on a single detector's score is such a risky move.

- Turnitin: Well-known in education for trying to keep false positives low, but its detection rate drops sharply against edited text. Studies show it can still produce false positives at a rate of 1-20%.

- OpenAI's Detector: The now-defunct tool from OpenAI itself was a case study in this challenge. It only correctly identified 26% of AI text while wrongly flagging 9% of human writing as AI-generated.

- Other Tools: Many other popular detectors show the same weaknesses, with accuracy dropping by over 50% after just a little paraphrasing.

These figures show that while the technology sounds impressive, its practical application is far from reliable. The data shows that a determined user can almost always bypass detection with minimal effort, and innocent writers can easily get caught in the crossfire. An effective humanizer like Top Humanizer works by reintroducing the linguistic complexity that these detectors are unable to parse, ensuring your text reads naturally and avoids false flags.

What Really Influences AI Detector Accuracy?

The accuracy of an AI detector isn't a fixed, reliable number like a car's top speed. It’s a moving target, and its performance can swing wildly depending on what you feed it. Getting a handle on these variables is key to understanding why your writing might get flagged, sometimes when you least expect it.

These tools aren't reading your work for meaning or nuance. They're pattern-matching machines, hunting for the statistical fingerprints left behind by algorithms. When those patterns are obvious, the detector does its job well. But when the trail is faint, mixed, or intentionally covered up, the results get shaky.

Text Length Matters—A Lot

One of the biggest levers on accuracy is simply how much text you give the detector to analyze. To get a good read on linguistic patterns like perplexity and burstiness, these tools need a decent sample size.

- Short and Shaky: Got a short email or a single paragraph under 200 words? There’s just not enough data for a confident call. The statistical signals are too weak, which means the chances of a mistake—either a false positive or a false negative—shoot way up.

- Long and Clear: On the other hand, longer documents like articles or essays (think 500 words or more) give the detector a much richer dataset. With more text, the consistent, often predictable rhythms of AI-generated content become much easier to spot.

It's like trying to identify a musician from a single note versus a full song. A single note is a guess, but a whole song reveals their unique style, tempo, and signature patterns.
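To see why short samples are shaky, consider how much a simple statistic like average sentence length wobbles from one sample to the next. The simulation below is an illustrative assumption, not how any particular detector works: it draws sentence lengths for a hypothetical writer from a uniform 5-to-30-word range and compares the spread of the average for short and long texts.

```python
import random
import statistics


def spread_of_mean_length(num_sentences: int, trials: int = 2000, seed: int = 0) -> float:
    """Standard deviation of the average sentence length across simulated texts."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    means = []
    for _ in range(trials):
        # Hypothetical writer: each sentence is 5 to 30 words long, uniformly.
        lengths = [rng.randint(5, 30) for _ in range(num_sentences)]
        means.append(statistics.mean(lengths))
    return statistics.pstdev(means)


# About 8 sentences (~140 words) versus 40 sentences (~700 words),
# assuming an average of 17.5 words per sentence.
short_spread = spread_of_mean_length(8)
long_spread = spread_of_mean_length(40)
print(f"short text: {short_spread:.2f} words, long text: {long_spread:.2f} words")
```

The short-text spread comes out roughly twice as large as the long-text spread (in theory by a factor of √5, because the spread shrinks with the square root of the number of sentences), so any verdict built on it is less stable.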

The Art of the Prompt

How the AI was instructed to write the text in the first place has a massive impact. A basic, lazy prompt usually spits out content that’s a dead giveaway for detectors because it’s loaded with generic phrases and cookie-cutter sentence structures.

But a clever user can throw a wrench in the works. Crafting a sophisticated prompt—like asking the AI to write "in the style of a 19th-century novelist" or "using only short, punchy sentences"—forces the model out of its comfort zone. This creates text that looks and feels different from its typical output, making it much harder to flag.

Human Editing: The Ultimate Disguise

Of all the factors, one stands out as the most powerful: human editing. The moment a person steps in to tweak, rephrase, or add their own flair to AI-generated text, a detector's ability to spot it drops off a cliff.

Why? Because human editors naturally inject the very randomness and personality that AI models lack.

By rewriting a clunky sentence for better flow, adding a personal story, or just varying the rhythm of the paragraphs, an editor smudges the AI's statistical fingerprints. This process naturally boosts the text’s perplexity and burstiness, making it read as far more human to an algorithm.

A study from Originality AI really put a spotlight on this. The tool was an impressive 96% accurate at identifying raw, untouched AI writing. But the research also revealed its blind spots. The detector had a 2% false positive rate (incorrectly flagging human work) and a 4% false negative rate, which jumped up when it analyzed AI content that had been edited by a person. You can dig into the findings on AI detector performance to see the full breakdown.

At the end of the day, a raw piece of AI text is an easy target. But a piece that has been thoughtfully guided and refined by a human hand? That's a much tougher challenge, and it's one that today's detectors often can't handle. This is precisely why a score from one of these tools should always be a starting point for investigation, never the final word.

Navigating the High Stakes of False Positives

Forget the technical jargon for a moment. Beyond all the talk of perplexity and burstiness, there's a much more pressing question: are AI detectors accurate enough to be trusted when someone's future is on the line? A tiny error margin might look fine in a lab, but out in the real world, a single false positive can be catastrophic.

Imagine a student pouring weeks into a research paper, only to have it flagged as AI-generated by a flawed tool. Instantly, they're facing accusations of cheating, a failing grade, or even expulsion. The burden of proof unfairly shifts to them, forcing them to defend their own work against a machine's cold, algorithmic judgment.

This isn’t just a hypothetical. Professional writers have lost clients and had their reputations damaged by false flags. The emotional and career damage from that kind of accusation is real, creating a climate of fear and undermining trust. When an automated system can ruin a person’s credibility with one wrong click, the stakes are incredibly high.

A "FLAGGED" label is an accusation that can feel impossible to fight, and it can derail academic and professional lives.

The Numbers Behind the Risk

Even the best tools on the market admit to a margin of error. Take GPTZero, for example. It claims an impressive 99% accuracy when differentiating between texts that are 100% AI and 100% human. But even they acknowledge a 1-2% false positive rate. This means for every 100 human-written documents it scans, one or two could be wrongly flagged.

At the scale of a large university processing tens of thousands of papers, a 1-2% false positive rate isn't a minor glitch; it's a recipe for hundreds of false accusations. It translates to real students facing real consequences because of a small statistical error rate.

This is exactly why understanding the limitations is so important. While GPTZero is one of the better performers, no tool is infallible. Independent studies have confirmed its strong performance, but also show how easily accuracy can crumble as AI models get more advanced. Simple paraphrasing, for instance, has been shown to cause detection accuracy to drop by over 50% in some tests. You can read more about the benchmarks and accuracy data of AI detectors.

An Imperfect Science with Real Victims

The heart of the problem is that AI detection isn't a hard science; it's a game of probabilities. These tools work by spotting linguistic patterns that are typical of AI. But those same patterns can sometimes overlap with perfectly normal human writing styles.

This is especially true for:

- Non-native English speakers: Their sentence structures might be more formulaic, unintentionally triggering AI flags.

- Technical writers: Straightforward, descriptive content often lacks the creative "burstiness" that detectors expect from humans.

- Developing writers: Students who are still learning to write formally often produce text that seems overly predictable to an algorithm.

At the end of the day, these tools are making an educated guess, not delivering a verdict. When teachers or employers treat a high AI score as concrete proof of misconduct, they are putting innocent people at risk. This is why it's ethically crucial to use AI detectors as investigative aids—not as judge, jury, and executioner. Human oversight, critical thinking, and a simple conversation have to be the final steps in upholding academic and professional integrity.

How to Use AI Writing Tools Responsibly

Given how unpredictable AI detectors can be and the serious consequences of a false flag, the smartest move is to avoid setting them off to begin with. This doesn’t mean ditching AI writing tools altogether. It just means adopting a workflow that treats AI as an assistant, not a ghostwriter.

The idea is to use these tools to amplify your own abilities—to help with research, brainstorm ideas, or smooth out a rough draft—without ever handing over the reins of your critical thinking. When you preserve your unique voice and intellectual ownership, you naturally create work that detectors are far less likely to question.

Adopt an AI Collaboration Workflow

Imagine AI as a really smart intern. You'd never let an intern publish a final report with your name on it without you reviewing, editing, and adding your own expertise. The same logic applies here. A responsible workflow always keeps you in the driver's seat.

This approach doesn't just lower your detection risk; it leads to better, more authentic work. It's a win-win, helping you build your own skills while using technology intelligently.

An AI collaboration model treats the AI as a starting point, never the final product. The core ideas, the critical analysis, and the final words must come from you. This is the single most effective way to ensure your work is both original and undetectable.

The steps below provide a simple framework for working with AI ethically and effectively. Following this method helps you maintain your authentic voice from start to finish.

A Practical Framework for Responsible AI Use

Here's how to integrate AI into your writing process without losing your personal touch.

- Use AI for Ideation and Structure: Start by asking an AI to help you brainstorm topics, explore different angles, or create a basic outline. You can have it generate research questions or summarize a dense article. This gets the ball rolling before you've written a single word of the final piece.

- Generate a Rough First Draft (Optional): If you're really stuck on a blank page, you can ask the AI to generate a very rough draft. The key is to treat this output as raw material: a block of clay you need to sculpt, not a finished statue. It's a resource, not a replacement for your own writing.

- Perform a Heavy Human Edit: This is the most important step. Go through the AI-generated text and rewrite it from the ground up in your own voice. Tweak sentence structures, swap out generic phrases for your own vocabulary, and add personal stories or unique insights. The goal isn't just to paraphrase; it's to completely transform the content.

- Fact-Check and Add Your Expertise: AI models are known for "hallucinations" (making things up with complete confidence). Never trust AI-generated information without verifying it. Check every statistic, claim, and source. Even more importantly, weave in your own analysis and perspective to show you truly understand the subject.

- Refine and Polish: Read the finished draft out loud. This helps you catch any clunky phrasing or unnatural rhythms. Does it sound like something you would actually say? This final pass ensures the text has the natural variation and flow that AI detectors associate with human writing.

When you follow this process, the accuracy of AI detectors becomes much less of a worry. Your final piece is a true blend of human intellect and machine assistance—an authentic work that is undeniably yours.

A Few Lingering Questions About AI Detector Accuracy

Even with all the data and studies, a few common questions always seem to pop up. Let's tackle them head-on to clear up any confusion about how these tools actually perform in the real world.

Can Any AI Detector Be 100 Percent Accurate?

In a word, no. We're not even close to 100% accuracy, and we probably never will be. Think about it: AI models are a moving target, constantly learning and evolving their writing style. At the same time, human writing is incredibly diverse and unpredictable.

Trying to build a model that perfectly separates the two is like trying to catch smoke with a net. There will always be a margin of error, which means you’ll always have to deal with the risk of false positives (human work flagged as AI) and false negatives (AI work that slips through).

Does Editing AI Content Help Avoid Detection?

It sure does, but it has to be real editing. Just swapping out a few words with a thesaurus or running it through a cheap "spinner" tool won't fool the better detectors. They’re smart enough to see past those superficial changes.

To truly make AI content your own—and invisible to detectors—you need to break it down and rebuild it. That means rewriting sentences from scratch, shuffling the order of your ideas, and mixing up your sentence lengths. Most importantly, you need to inject your own perspective, stories, and voice. That’s what brings back the human fingerprint.

Should I Trust a Score From a Single AI Detector?

Relying on a single tool is a gamble. As we've seen from multiple studies, you can feed the same article to three different detectors and get three wildly different results. One might scream 90% AI, while another gives it an all-clear.

The best practice is to triangulate your results. Run your text through two or three of the top detectors. If you're consistently getting a "human" score across the board, you can feel much more confident that you're in the clear.
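As a rough sketch of that triangulation, the function below takes AI-likelihood scores from several detectors and only reports a confident result when they agree. The detector names, the scores, and the 0.5 cutoff are hypothetical placeholders; no real detector API is called.

```python
def triangulate(scores: dict[str, float], cutoff: float = 0.5) -> str:
    """Combine AI-likelihood scores (0.0-1.0) from several detectors."""
    if len(scores) < 2:
        raise ValueError("triangulation needs at least two detectors")
    votes_ai = sum(score >= cutoff for score in scores.values())
    if votes_ai == 0:
        return "consistently human"
    if votes_ai == len(scores):
        return "consistently AI"
    return "detectors disagree: review manually"


# Hypothetical results for the same article from three unnamed tools.
print(triangulate({"detector_a": 0.90, "detector_b": 0.05, "detector_c": 0.40}))
print(triangulate({"detector_a": 0.10, "detector_b": 0.05, "detector_c": 0.20}))
```

When the tools disagree, as they often do, the sketch refuses to pick a winner and hands the text to a human for review, which is how these scores should be treated anyway.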

Are AI Detectors Biased Against Certain Writers?

Unfortunately, yes. This is one of the biggest ethical minefields with this technology. A growing body of research shows that detectors are far more likely to flag writing from non-native English speakers as AI-generated.

The bias creeps in because the writing patterns of someone learning English (simpler sentence structures, more common vocabulary) can look a lot like the predictable, statistically driven text that AI produces. This is a serious flaw that can unfairly penalize people simply for their language background.

When you need to be certain your AI-assisted work reads as genuinely human and sails past detectors, a tool like Top Humanizer can be a lifesaver. It helps you refine your text to avoid flags from systems like Turnitin and GPTZero without losing your core message. Try Top Humanizer today to protect your work and maintain your credibility.

January 29, 2026
