Missing Context Triggers Search Engine Doubt

AI-generated articles often drop out of the top 20 search results within 2–4 weeks because they lack specific context.

When web pages lack details tied to their target query, audience, or current information, Google’s systems, including the helpful content system and spam algorithms, can flag them as low quality.

This lowers organic rankings, especially for sites with a Moz Domain Authority (DA) of 10–30, which have weaker trust signals.

Tailoring content to searcher intent and current context tends to improve rankings.

Forgetting Local or Topical Details

Omitting regional data or discipline-specific references marks content as thin and generic under Google’s classifiers.

For example, a small business law article that omits state-specific regulations loses relevance.

Including local statistics, industry terms, and recent developments signals authority and lifts both rankings and click-through rates.

Over-reliance on Generalizations

Generic statements without industry examples or data signal low expertise and authority.

Google’s Quality Rater Guidelines push against shallow material that lacks actionable detail.

Content that stays general, without direct tactics or examples, gets indexed but does not rank for competitive queries.

Misrepresenting Authority Sources

AI-generated content that invents or misattributes expert sources can be flagged by Google’s spam detection.

This can trigger manual actions and algorithmic demotion.

Use only reputable, current publications and clearly state author credentials to strengthen E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness).

Neglecting Up-to-Date Data

Outdated statistics or unverifiable claims can conflict with reference sources such as Google’s Knowledge Graph or published fact-checks, and that conflict can get your page downgraded.

Updating facts and using the most current data keeps your content relevant and helps sustain rankings.
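A quick way to find candidates for a data refresh is to scan a draft for cited years that fall outside a freshness window. The Python sketch below is illustrative only. The function name `stale_year_mentions`, the two-year cutoff, and the assumption that dates appear as four-digit years are all hypothetical choices, not a rule Google publishes.

```python
import re
from datetime import date
from typing import List, Optional

# Four-digit years from 1900 to 2099 (a simplifying assumption).
YEAR_PATTERN = re.compile(r"\b(19\d{2}|20\d{2})\b")


def stale_year_mentions(text: str, max_age_years: int = 2,
                        today: Optional[date] = None) -> List[int]:
    """Return the distinct years cited in `text` that are older than `max_age_years`."""
    current_year = (today or date.today()).year
    years = {int(y) for y in YEAR_PATTERN.findall(text)}
    return sorted(y for y in years if current_year - y > max_age_years)


# Usage: flag a paragraph whose supporting data may need updating.
print(stale_year_mentions("A 2019 survey and a 2025 report agree.",
                          today=date(2026, 1, 31)))  # [2019]
```

An old year is not wrong in itself, because historical facts are legitimately dated. Treat each hit as a prompt to check whether newer data exists.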

Unnatural Language Patterns That Flag Automation

Machine-generated patterns can lead algorithms to classify content as unhelpful or inauthentic.

Google’s detection systems, such as SpamBrain (its AI-based spam-prevention system), target unnatural writing and can cut visibility quickly, often within one indexing cycle (2–4 weeks).

Affected sites lose impressions and clicks, and the decline is visible in Google Search Console.

Repeating Key Phrases Too Precisely

Repeating exact-match keywords at high density (above roughly 2–3%, or about 10–15 uses in a 500-word article) can trigger Google’s spam systems; keyword stuffing is explicitly listed in Google’s spam policies.
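Keyword density is simple arithmetic, so it is easy to check before publishing. This minimal Python sketch uses one common definition: exact-phrase occurrences divided by total words, times 100. SEO tools differ (some multiply by the number of words in the phrase), and the function name `keyword_density` is hypothetical.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Exact-match density of `phrase` as a percentage of all words in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide a window of the phrase's length across the word list.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits / len(words)


text = "cheap flights " * 3 + "filler " * 94   # 100 words, 3 exact matches
print(keyword_density(text, "cheap flights"))  # 3.0
```

A result above about 2.0 falls in the range the article warns about. At that point, vary the phrasing rather than deleting mentions outright.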

Paraphrasing and varying language directly counter keyword stuffing filters.

Most guides suggest simply reducing keyword frequency; this guide also emphasizes semantic diversity, which helps content rank for related queries.

Awkward Sentence Structure Patterns

Monotonous sentence length or repeated syntax gets caught by systems trained on natural writing.

Google’s algorithms penalize blocks lacking variety, so varying sentence structure throughout is critical to avoiding detection.
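One rough way to measure monotony is the coefficient of variation of sentence lengths, which is the standard deviation divided by the mean. The sketch below is an illustrative proxy, loosely similar to the "burstiness" idea some detectors describe. It is not the metric any particular detector or Google system uses. The name `sentence_length_variation` and the naive punctuation-based sentence splitter are assumptions.

```python
import re
from statistics import mean, pstdev


def sentence_length_variation(text: str) -> float:
    """Coefficient of variation of sentence lengths (in words).

    0.0 means every sentence has the same length; higher means more variety.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return pstdev(lengths) / mean(lengths)


flat = "The tool is fast. The tool is cheap. The tool is new."
varied = "The tool is fast. Cheap, too. And because it is new, few teams have tried it yet."
print(sentence_length_variation(flat))  # 0.0
print(sentence_length_variation(varied))
```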

Overuse of Stop Words or Transitional Phrases

Transitional phrases (“however,” “in conclusion,” etc.) used at rates uncommon in native writing raise flags.

Keeping transitions to one or two per paragraph matches typical human style and reduces detection risk.
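To apply the one-or-two-per-paragraph guideline, you can count transitions paragraph by paragraph. In this minimal sketch, the phrase list is a small illustrative sample, paragraphs are assumed to be separated by blank lines, and both function names are hypothetical.

```python
import re
from typing import List

# Illustrative list only; extend it to match your own style guide.
TRANSITIONS = ["however", "moreover", "furthermore", "in conclusion",
               "additionally", "therefore", "in addition", "consequently"]
TRANSITION_PATTERN = re.compile(
    r"\b(?:" + "|".join(map(re.escape, TRANSITIONS)) + r")\b", re.IGNORECASE)


def transitions_per_paragraph(text: str) -> List[int]:
    """Count transitional phrases in each blank-line-separated paragraph."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    return [len(TRANSITION_PATTERN.findall(p)) for p in paragraphs]


def paragraphs_over_limit(text: str, limit: int = 2) -> List[int]:
    """Zero-based indexes of paragraphs using more than `limit` transitions."""
    return [i for i, n in enumerate(transitions_per_paragraph(text)) if n > limit]


draft = "However, this works. Moreover, it scales. Therefore it ships.\n\nA plain paragraph."
print(transitions_per_paragraph(draft))  # [3, 0]
print(paragraphs_over_limit(draft))      # [0]
```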

Mismatched Reading Level

A readability score far from audience expectations (elementary-level prose for a technical audience, or vice versa) often signals AI authorship to Google’s quality filters.

Targeting an 8th–12th grade reading level for professional topics helps maintain algorithmic trust.
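The Flesch–Kincaid grade level is one widely used formula for checking reading level: 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The sketch below implements that formula with a crude vowel-group syllable counter. That counter is an assumption, and dictionary-based tools will give somewhat different scores. The function names are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level of `text`."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


grade = flesch_kincaid_grade("The cat sat.")
print(round(grade, 2))  # -2.62
```

For a professional topic, a draft that scores roughly between 8 and 12 falls in the range suggested above.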

Surface Edits That Fail Deeper Detection Checks

Surface-level edits to AI-generated content fail to break underlying automation patterns.

This undermines credibility and weakens organic search results as detection systems grow more advanced.

Swapping Synonyms Without Restructuring

Replacing words with synonyms, while leaving sentence structure intact, does not hide AI origins.

Detection platforms like GPTZero and Originality.ai focus on syntax, clause structure, and sentence rhythm—not just vocabulary.

Content with simple swaps (such as changing “quick” to “rapid”) keeps the AI-patterned negation and clause order that detection tools pick up.

As detailed in “How AI detectors interpret sentence structure,” these platforms analyze deeper linguistic patterns than vocabulary alone.

These edits often create awkward syntax, making detection easier.

To avoid this, restructure or combine sentences to break the expected patterns.

Word replacement alone fails.

Manual Edits That Ignore Paragraph Flow

Editing only at the sentence level, without improving paragraph rhythm, leaves LLM signatures—abrupt transitions and poor logical flow.

Detection systems and editorial reviewers flag paragraphs that lack coherence or progression; when only local changes are made, that gap signals AI origin.

Sites in the DA 10–30 range routinely fail to rank when this weakness remains.

Search systems recognize and discount content that lacks clear, human-like idea development.

Brushing Over Factual Consistency

Minimal edits miss fact mismatches—a known problem in AI outputs.

If you change a statistic or name without updating all related references in nearby text, internal contradictions persist.
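After you change a statistic, a mechanical search for the old value catches references you missed. This minimal sketch assumes you know which old values you replaced. The helper name `stale_references` is hypothetical.

```python
from typing import List, Tuple


def stale_references(text: str, old_values: List[str]) -> List[Tuple[int, str]]:
    """Return (line_number, value) for every line that still contains an old value.

    Uses plain substring matching, so "12%" also matches inside "112%";
    review each hit by hand.
    """
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for value in old_values:
            if value in line:
                hits.append((line_no, value))
    return hits


draft = "Churn fell to 15% in 2025.\nThat 12% figure beat forecasts.\nOur 12% drop..."
print(stale_references(draft, ["12%"]))  # [(2, '12%'), (3, '12%')]
```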

Google’s quality systems and human quality raters look for internal consistency, and conflicting details can push content down in rankings within weeks.

Consistent cross-referencing at article and site level keeps authority signals intact.

Retaining Template Structures

Leaving standard AI frameworks—formulaic intros, repeated bullet lists, and stock calls-to-action—signals automation.

AI detectors identify repetitive formatting, generic transitions, and overused summaries.

For organic search, keeping these templates leads to lost impressions after indexing, as Google’s systems compare structure across the site.
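Formulaic intros often appear as the same sentence opening repeated across a page. The sketch below is a rough check under stated assumptions. It treats an opener as the first three words of a sentence and flags any opener that recurs three or more times. The function name and both thresholds are hypothetical, and nothing here reflects how Google compares structure.

```python
import re
from collections import Counter
from typing import Dict


def repeated_openers(text: str, n_words: int = 3, min_count: int = 3) -> Dict[str, int]:
    """Sentence openings (first `n_words` words, lowercased) seen at least `min_count` times."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    openers = Counter(
        " ".join(s.lower().split()[:n_words])
        for s in sentences
        if len(s.split()) >= n_words
    )
    return {opener: count for opener, count in openers.items() if count >= min_count}


page = ("In today's world, speed matters. In today's world, teams ship daily. "
        "In today's world, content scales. Here is the data.")
print(repeated_openers(page))  # {"in today's world,": 3}
```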

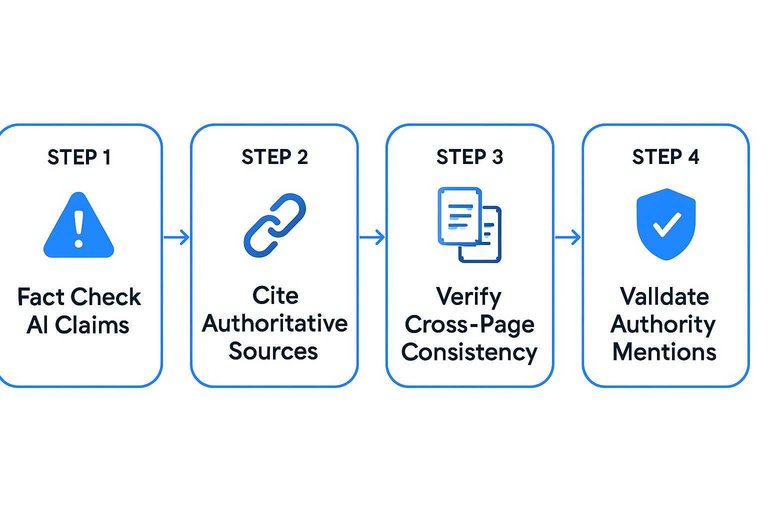

Fact-Checking Shortcuts That Risk Penalties

Publishing AI-generated claims without verification exposes errors both publicly and algorithmically.

This erodes user trust and reduces search visibility.

Strong fact-checking and attribution are mandatory for competitive rankings, especially in YMYL (Your Money or Your Life) sectors where scrutiny is highest.

Blindly Trusting AI Fact Claims

Publishing AI-derived facts unchecked spreads fabricated or outdated information, a well-documented risk in LLM output, especially when the underlying data is incomplete.

Google’s Knowledge Graph and third-party fact-checkers can flag or downgrade these pages within weeks.

Every numerical or historical claim needs validation against recognized sources.
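To make validation systematic, you can pull every sentence that contains a digit into a checklist. This minimal sketch only catches claims written with digits. Spelled-out numbers and named historical events still need a manual pass. The function name `numeric_claims` is hypothetical.

```python
import re
from typing import List

HAS_DIGIT = re.compile(r"\d")


def numeric_claims(text: str) -> List[str]:
    """Sentences containing at least one digit, in document order."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if HAS_DIGIT.search(s)]


draft = "Sales rose 40% last year. Customers were happy. The firm was founded in 1998."
for claim in numeric_claims(draft):
    print("VERIFY:", claim)
```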

Ignoring Source Traceability

Omitting citations for data or expert analysis undermines trust and prompts content downgrades in both algorithm and manual reviews.

AI outputs without visible attribution are deprioritized, a pattern seen in health, tech, and finance.

Back each significant claim with a direct link to an authoritative, original source.
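A simple pre-publication check is to flag paragraphs that state a number but contain no link at all. In this sketch, a bare URL, a Markdown link, or an HTML anchor counts as a citation, and paragraphs are assumed to be separated by blank lines. A link's presence does not prove the source is authoritative or original, so hits and non-hits both still need review. The function name is hypothetical.

```python
import re
from typing import List

HAS_DIGIT = re.compile(r"\d")
# Bare URLs, Markdown links, or HTML anchors count as a citation here.
HAS_LINK = re.compile(r"https?://|\[[^\]]+\]\([^)]+\)|<a\s+href=", re.IGNORECASE)


def uncited_paragraphs(text: str) -> List[int]:
    """Zero-based indexes of paragraphs that state a number but contain no link."""
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    return [i for i, p in enumerate(paragraphs)
            if HAS_DIGIT.search(p) and not HAS_LINK.search(p)]


draft = ("Churn fell 30% ([report](https://example.com/report)).\n\n"
         "Churn fell 30% overall.\n\n"
         "No figures here.")
print(uncited_paragraphs(draft))  # [1]
```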

Overlooking Cross-Page Contradictions

When AI-driven updates span multiple pages, inconsistencies in stats, terms, or conclusions appear if you skip thorough reference checks.

These contradictions erode domain credibility and can stall or lower authority around Google’s core updates, which roll out several times a year.

Align and verify information across all related content to protect your reputation.
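Once the key facts on each page are recorded as simple key/value pairs, finding contradictions is a grouping problem. This sketch assumes that extraction has already been done, whether by hand or by another tool. The page paths, fact keys, and function name are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def find_contradictions(pages: Dict[str, Dict[str, str]]) -> Dict[str, Dict[str, List[str]]]:
    """Facts stated with different values on different pages.

    `pages` maps a URL to its facts, e.g. {"/pricing": {"trial_days": "14"}}.
    Returns {fact_key: {value: [urls stating that value]}} for conflicting keys only.
    """
    seen: Dict[str, Dict[str, List[str]]] = defaultdict(lambda: defaultdict(list))
    for url, facts in pages.items():
        for key, value in facts.items():
            seen[key][value].append(url)
    return {key: dict(values) for key, values in seen.items() if len(values) > 1}


site = {
    "/a": {"trial_days": "14", "founded": "2010"},
    "/b": {"trial_days": "30"},
    "/c": {"founded": "2010"},
}
print(find_contradictions(site))  # {'trial_days': {'14': ['/a'], '30': ['/b']}}
```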

Assuming Authority From Mentions

Listing organizations, publications, or experts without direct citations (like links or official documents) invites flags for manipulative or shallow content.

Google’s E-E-A-T framework prioritizes verifiable information; unsupported references can hurt rankings, especially in competitive fields.

Only cite authority sources you can directly validate with traceable evidence.

Unlike most guides, which assume that simply naming reputable entities is enough to boost credibility, this guide insists on using only directly verifiable mentions to align with Google’s evaluation criteria.

Neglecting User Signals That Reveal Inauthenticity

Superficially humanized AI content fails to earn trust, and user behavior reveals when readers sense inauthenticity.

When content lacks credibility, session duration and conversion rates fall into clear warning ranges; for instance, average session duration on these pages drops below 45 seconds.

Ignoring these user-driven metrics limits organic reach and erodes site authority over time.

High Bounce Rates on Key Pages

Pages with AI-humanized content on DA 10–30 sites routinely post bounce rates above 70%.

Users leave fast because the content doesn’t meet their needs, typically due to repetitive or generic language.

Search engines may draw on aggregated engagement signals to flag these patterns, although Google has stated that it does not use Google Analytics data for ranking.

When bounce rates stay high for 2–6 weeks, rankings drop.
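To catch this early, you can check weekly bounce rates for a sustained run above the threshold. This sketch uses the article's figures as defaults: above 70%, for at least two consecutive weeks. It also assumes you have already exported one bounce rate per week as a fraction. In GA4, bounce rate is the share of sessions that were not engaged sessions. The function name is hypothetical.

```python
from typing import List


def sustained_high_bounce(weekly_rates: List[float], threshold: float = 0.70,
                          min_weeks: int = 2) -> bool:
    """True if the bounce rate exceeds `threshold` for `min_weeks` consecutive weeks."""
    run = 0
    for rate in weekly_rates:
        run = run + 1 if rate > threshold else 0
        if run >= min_weeks:
            return True
    return False


print(sustained_high_bounce([0.65, 0.72, 0.75, 0.60]))  # True
print(sustained_high_bounce([0.72, 0.60, 0.74]))        # False
```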

Low Engagement With Calls-to-Action

Click-through rates on calls-to-action below industry standards—less than 1% for informational pages—show the page lacks credibility.

When AI-generated content misses nuance and context, emotional impact drops and CTAs underperform.

Monitoring CTA engagement right after content changes pinpoints where failed humanization stalls conversions.
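CTA click-through rate is clicks divided by views, so comparing each CTA against the 1% floor mentioned above takes only a few lines. In this sketch, CTAs with zero views are skipped rather than flagged. The function name and the sample numbers are hypothetical.

```python
from typing import Dict, List, Tuple


def underperforming_ctas(stats: Dict[str, Tuple[int, int]], floor: float = 0.01) -> List[str]:
    """Names of CTAs whose click-through rate (clicks / views) is below `floor`."""
    return sorted(
        name for name, (clicks, views) in stats.items()
        if views > 0 and clicks / views < floor
    )


# Hypothetical (clicks, views) for two CTAs on an informational page.
print(underperforming_ctas({"newsletter": (5, 1000), "demo": (25, 1000)}))  # ['newsletter']
```

Running the same check on data from before and after a content change shows whether a rewrite moved a CTA above or below the floor.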

Consistent Negative Feedback Patterns

A steady trend of negative feedback—repeated survey responses with terms like ‘robotic,’ ‘unnatural,’ or star ratings below 3.0—signals chronic problems from failed humanization.

When these patterns persist for a month or more, the message is clear: the language choices are destroying trust.

You must track and fix these issues to recover.
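Tracking this can be automated with a weekly check. The sketch below counts a response as negative if it is rated below 3.0 or uses one of the example terms. It then reports a chronic problem if every one of the last four weeks exceeds a negative-share threshold. The 30% threshold, the weekly windows, the `Feedback` record, and the function names are all assumptions for illustration.

```python
import re
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List

# Terms taken from the examples above; extend as needed.
NEGATIVE_TERMS = re.compile(r"\b(robotic|unnatural)\b", re.IGNORECASE)


@dataclass
class Feedback:
    day: date
    rating: float  # star rating, assumed to be on a 1-5 scale
    comment: str


def negative_share(items: List[Feedback], start: date, end: date) -> float:
    """Share of feedback dated in [start, end] that is rated below 3.0 or uses a negative term."""
    window = [f for f in items if start <= f.day <= end]
    if not window:
        return 0.0
    bad = [f for f in window if f.rating < 3.0 or NEGATIVE_TERMS.search(f.comment)]
    return len(bad) / len(window)


def persistent_negative(items: List[Feedback], today: date,
                        weeks: int = 4, threshold: float = 0.3) -> bool:
    """True if each of the last `weeks` 7-day windows has a negative share >= threshold."""
    for w in range(weeks):
        end = today - timedelta(days=7 * w)
        start = end - timedelta(days=6)
        if negative_share(items, start, end) < threshold:
            return False
    return True
```

A week with no responses counts as not negative here, so gaps in survey data will not trigger a false alarm.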

Stagnant or Declining Natural Link Growth

Sites whose referring-domain counts in Ahrefs or SEMrush stay flat or decline over 60–90 days lose organic link momentum.

This slide happens because readers and publishers ignore AI-personalized pages they see as generic.

Early action when these trends appear prevents long-term damage to authority and rankings.
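A least-squares slope over the 60–90 day window turns "flat or declining" into a number. This sketch assumes you have exported one referring-domain count per day. The export format depends on the tool. The 60-day minimum matches the low end of the article's window, and both function names are hypothetical. In practice, a small tolerance around zero may be more useful than the strict `<= 0` cutoff used here.

```python
from typing import List


def trend_slope(counts: List[float]) -> float:
    """Ordinary least-squares slope of daily counts, in units per day."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two data points")
    x_mean = (n - 1) / 2
    y_mean = sum(counts) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(counts))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def losing_link_momentum(daily_referring_domains: List[int], min_days: int = 60) -> bool:
    """True if at least `min_days` of data show a flat or falling trend."""
    if len(daily_referring_domains) < min_days:
        return False
    return trend_slope(daily_referring_domains) <= 0


print(losing_link_momentum([120] * 60))        # True (flat)
print(losing_link_momentum(list(range(60))))   # False (growing)
```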

Ready to boost your organic reach by instantly transforming AI content into natural, human-like writing with zero detection risk and no expertise required? Start humanizing your content.

January 31, 2026

January 29, 2026
